Open source projects

ROLE

XR Prototyper

DURATION

2019–2025

TECH

Unity Engine, C#

A collection of open source projects I've worked on both professionally and personally. Most can be found on GitHub and were created to solve specific problems, streamline workflows and provide useful resources for the developer community.

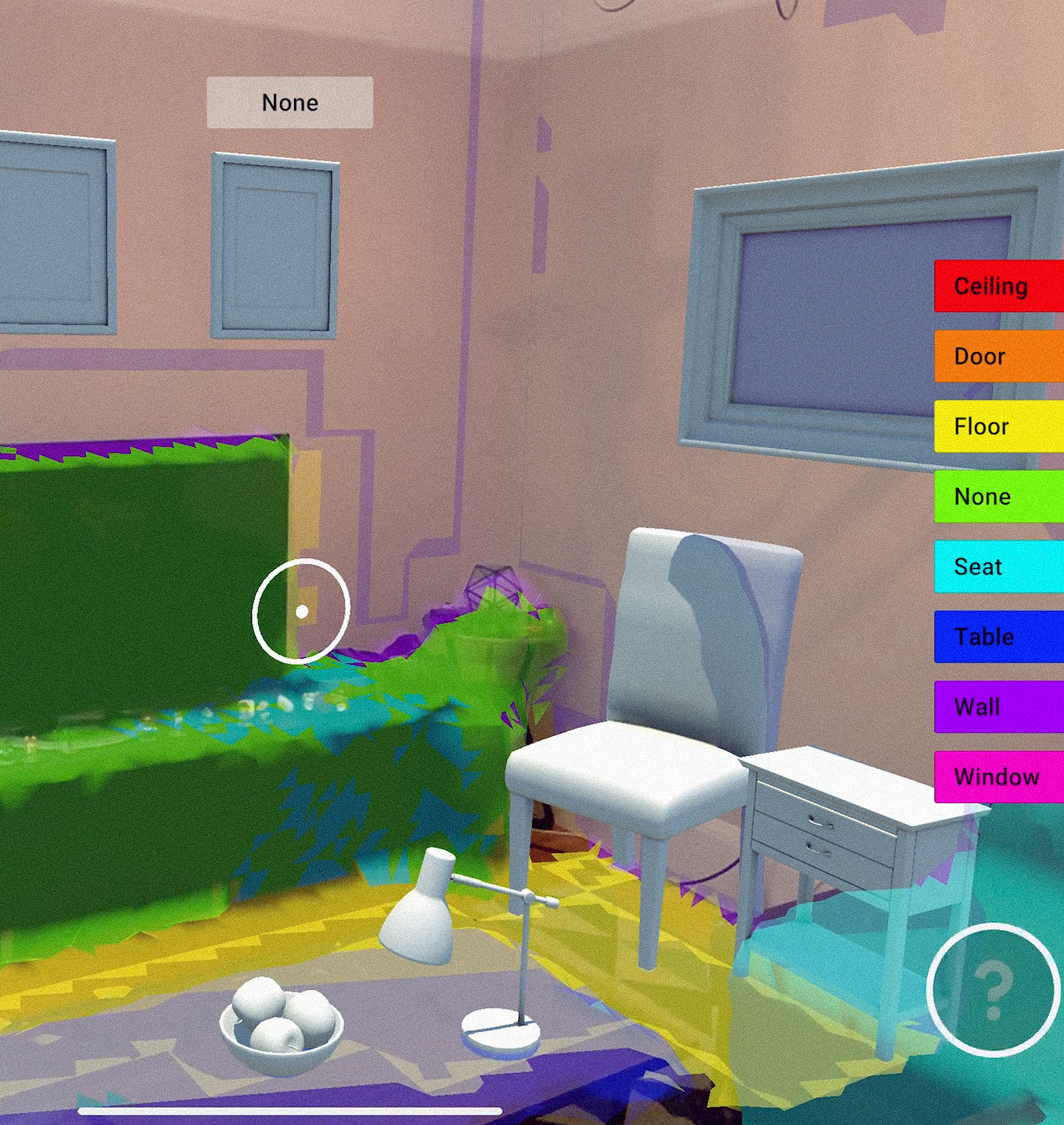

ARFoundation meshing placement

GOAL

Provide a sample app that shows developers how to utilize the latest ARKit API for real-time mesh generation and semantic tagging

CHALLENGES

• Brand new feature with sparse documentation and samples

• Apple to Unity translation wrapper exposed data in a convoluted way

• Difficult to debug runtime data

An open source sample app that uses the ARKit LiDAR sensor to generate a real-time mesh and then leverages semantic tagging for interactive visualization and placement. Available as part of the AR Foundation Demos repository.

Development process

PROCESS

• Learn API and understand the data

• Ideate on unique use cases

• Build and iterate prototype

• Polish demo and publish app

Understanding API & data

Scene geometry

• Topological map of the environment

• Semantic classification of non-planar surfaces

• Object occlusion

• Physics interactions

AR mesh classification

.ceiling

.door

.floor

.none

.seat

.table

.wall

.window
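As a rough illustration of how these labels could drive placement, here is a minimal sketch in plain C#. The `MeshLabel` enum and `PlacementRules` class are hypothetical stand-ins written for this example, not the ARKit or AR Foundation types, and the rule that only floors, tables and seats accept objects is an assumption rather than the sample app's actual logic.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the classification labels listed above;
// not the actual ARKit or AR Foundation type.
public enum MeshLabel { None, Ceiling, Door, Floor, Seat, Table, Wall, Window }

public static class PlacementRules
{
    // Assumed rule set: only these surface types accept placed objects.
    static readonly HashSet<MeshLabel> s_Placeable = new HashSet<MeshLabel>
    {
        MeshLabel.Floor, MeshLabel.Table, MeshLabel.Seat
    };

    // True when an object may be placed on a mesh face with this label.
    public static bool CanPlaceOn(MeshLabel label) => s_Placeable.Contains(label);

    // Text for a debug overlay; unclassified faces get a readable name.
    public static string DisplayName(MeshLabel label) =>
        label == MeshLabel.None ? "Unclassified" : label.ToString();
}
```

A raycast hit against the generated mesh could then look up the label of the face it struck and call `PlacementRules.CanPlaceOn(label)` before spawning an object.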

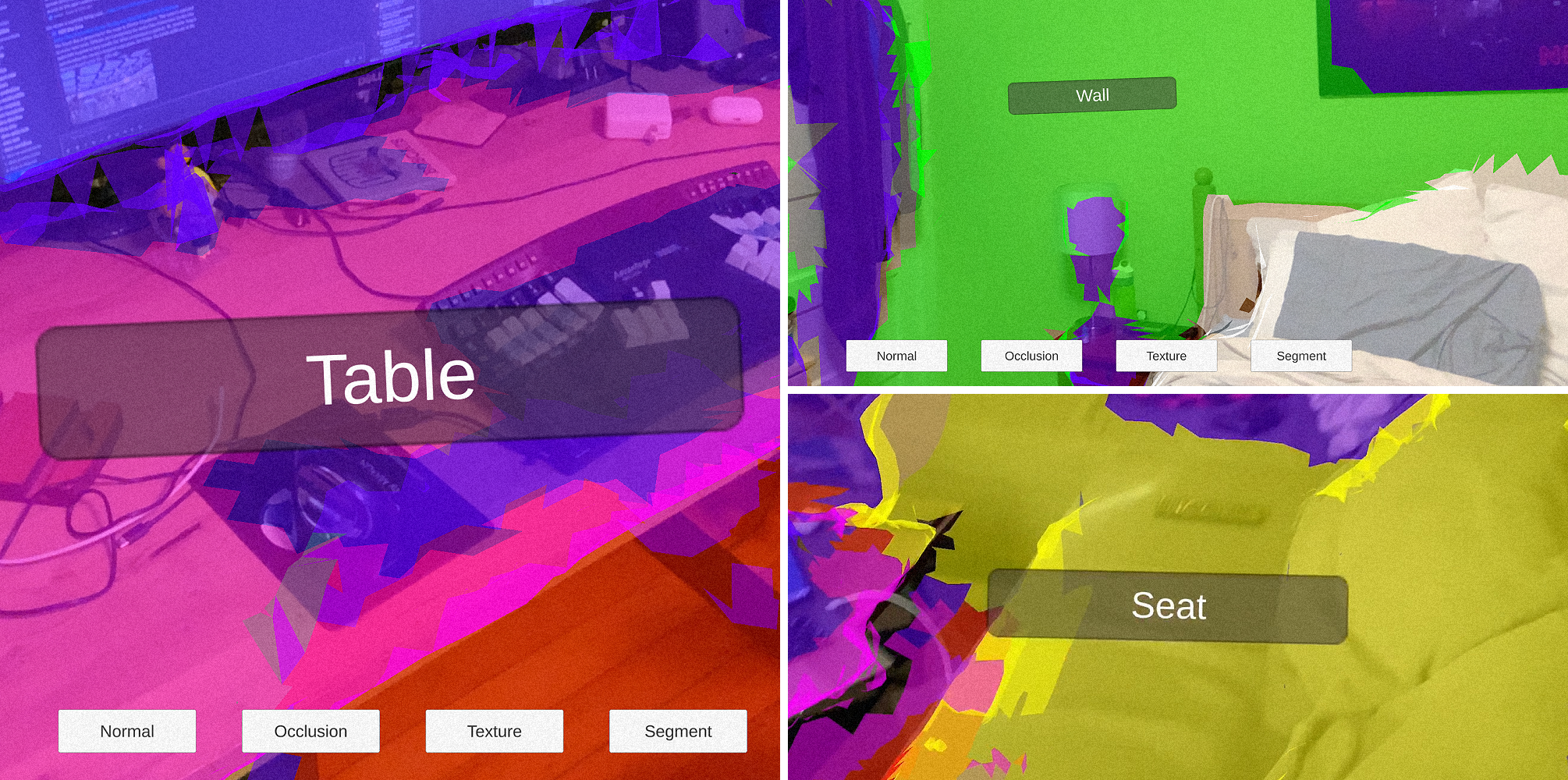

Build & iterate

Testing functionality

Visual & interaction polish

Finishing touches

Mobile AR UX

GOAL

Provide a flexible framework for developers to use when creating mobile AR apps to help onboard users

CHALLENGES

• Supporting a wide range of platform features

• Creating a usable and extensible API

• Triggering animations sequentially based on runtime data

A UI / UX framework that guides users through a variety of mobile AR apps. The framework pairs each piece of instructional UI with an instructional goal, so an instruction stays on screen until its goal is met. Built in close collaboration with designer Vishnu Ganti. The framework is available as part of the AR Foundation Demos repository.

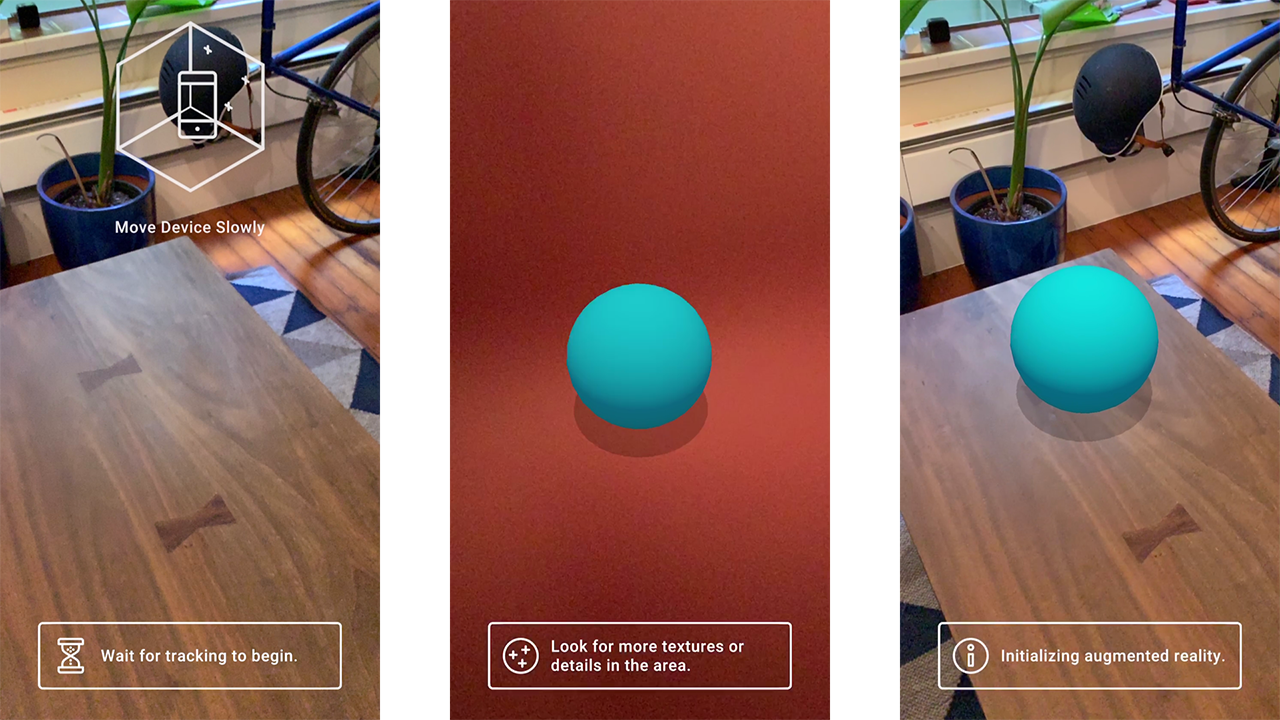

How it works

Each onboarding animation is paired with a goal that queries the AR Foundation trackable managers and completes once a set number of trackables has been found. The goals are stored as lambda functions that return a bool.

    bool PlanesFound() => m_PlaneManager && m_PlaneManager.trackables.count > 0;

Any app can set up any number of goals that appear sequentially. A common setup is to first instruct the user to find a plane and then tap to place an object. The goals are stored in a Queue and invoked until they return true.

    if (m_UXOrderedQueue.Count > 0 && !m_ProcessingInstructions)
    {
        // pop off
        m_CurrentHandle = m_UXOrderedQueue.Dequeue();

        // exit instantly, if the goal is already met it will skip showing the first UI and move to the next in the queue
        m_GoalReached = GetGoal(m_CurrentHandle.Goal);
        if (m_GoalReached.Invoke())
        {
            return;
        }

        // fade on
        FadeOnInstructionalUI(m_CurrentHandle.InstructionalUI);
        m_ProcessingInstructions = true;
        m_FadedOff = false;
    }
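To make the queue behavior easier to follow outside Unity, here is a minimal, self-contained sketch of the same idea. `GoalQueue`, `Add`, `Tick` and `CurrentUI` are hypothetical names invented for this example and the fade animations are left out, so treat it as an approximation of the pattern rather than the framework's implementation.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, Unity-free stand-in for the framework's queue handling.
public class GoalQueue
{
    readonly Queue<(string ui, Func<bool> goal)> m_Queue =
        new Queue<(string ui, Func<bool> goal)>();
    Func<bool> m_CurrentGoal;

    // Name of the instruction currently shown, or null when none is shown.
    public string CurrentUI { get; private set; }

    public void Add(string ui, Func<bool> goal) => m_Queue.Enqueue((ui, goal));

    // Intended to be called once per frame.
    public void Tick()
    {
        if (m_CurrentGoal != null)
        {
            // Keep the instruction visible until its goal reports true.
            if (!m_CurrentGoal()) return;
            m_CurrentGoal = null;
            CurrentUI = null;
            return;
        }

        if (m_Queue.Count == 0) return;

        var (ui, goal) = m_Queue.Dequeue();

        // Goal already met: skip this instruction; the next one is tried on a later tick.
        if (goal()) return;

        m_CurrentGoal = goal;
        CurrentUI = ui;
    }
}
```

With two goals queued, for example `queue.Add("FindAPlane", () => planeCount > 0)` followed by `queue.Add("TapToPlace", () => tapped)`, repeated calls to `Tick()` show the first instruction until a plane is found and then move on to the second.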

The framework allows for goals to be dynamically added at runtime through the following API.

    m_UIManager = GetComponent<UIManager>();

    m_UIManager.AddToQueue(new UXHandle(UIManager.InstructionUI.CrossPlatformFindAPlane, UIManager.InstructionGoals.FoundAPlane));

The framework also supports Tracking Reasons, which tell the user why tracking is lost or not working. AR Foundation abstracts some of these reasons because they differ slightly between ARKit and ARCore.
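One way to surface such reasons is to map each one to a short hint. The sketch below is only an illustration: `TrackingReason` and `TrackingHints` are hypothetical names defined for this example, the set of reasons is an assumed subset, and the hint text is invented rather than taken from the framework.

```csharp
using System;

// Hypothetical enum for illustration; the framework and AR Foundation define their own types.
public enum TrackingReason
{
    None,
    Initializing,
    Relocalizing,
    InsufficientLight,
    InsufficientFeatures,
    ExcessiveMotion
}

public static class TrackingHints
{
    // Returns a short hint for the user, or null when tracking is healthy.
    public static string HintFor(TrackingReason reason)
    {
        switch (reason)
        {
            case TrackingReason.None:
                return null;
            case TrackingReason.Initializing:
            case TrackingReason.Relocalizing:
                return "Move your device slowly to scan the area.";
            case TrackingReason.InsufficientLight:
                return "Try a brighter space.";
            case TrackingReason.InsufficientFeatures:
                return "Point at a surface with more detail.";
            case TrackingReason.ExcessiveMotion:
                return "Slow down your movement.";
            default:
                return "Tracking is unavailable.";
        }
    }
}
```

Because the hints are keyed by a single platform-neutral enum, the same text can be shown regardless of which underlying AR platform reported the problem.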

There's localization support through Unity's localization package for the following languages:

• English

• French

• German

• Italian

• Spanish

• Portuguese

• Russian

• Simplified Chinese

• Korean

• Japanese

• Hindi

• Dutch

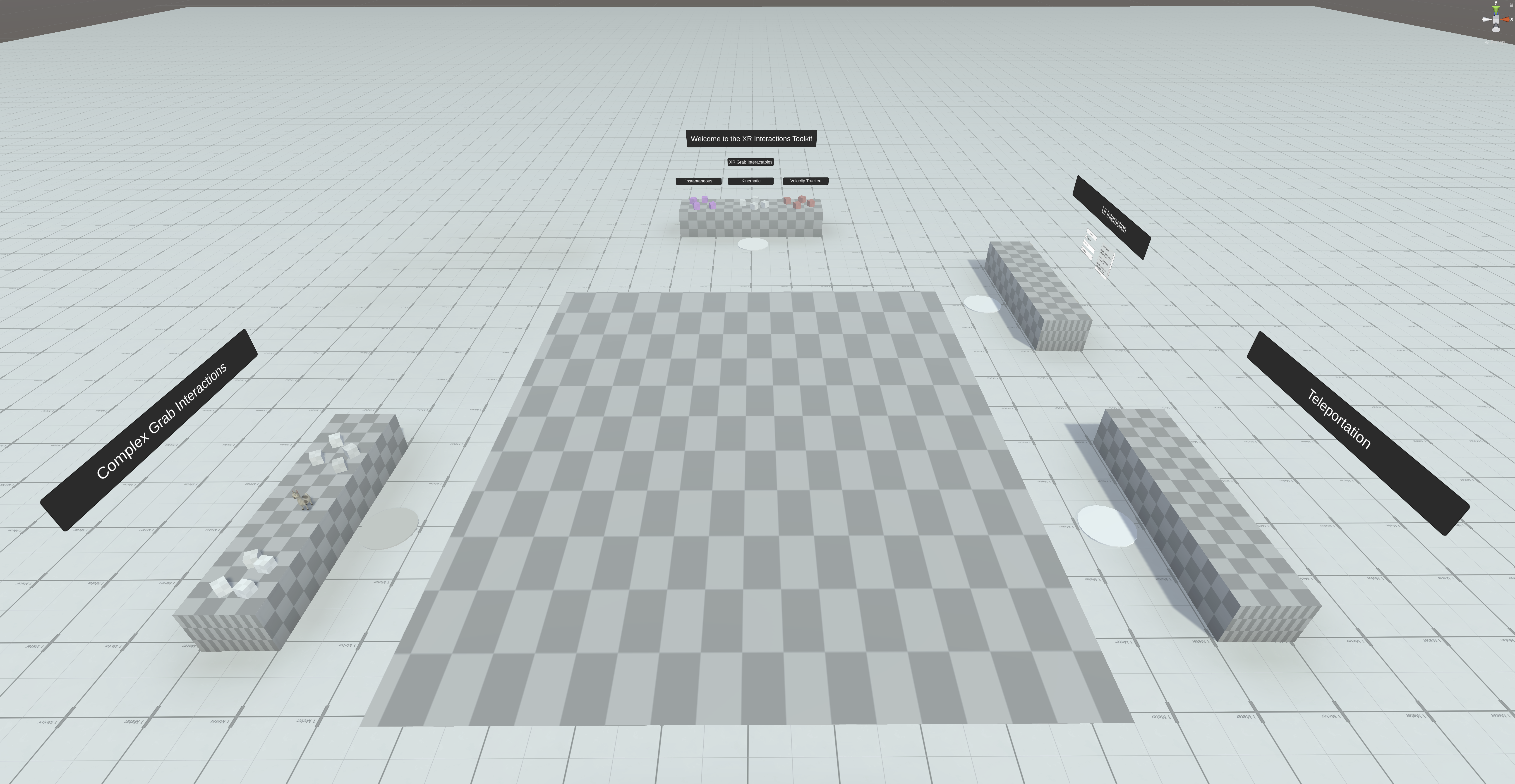

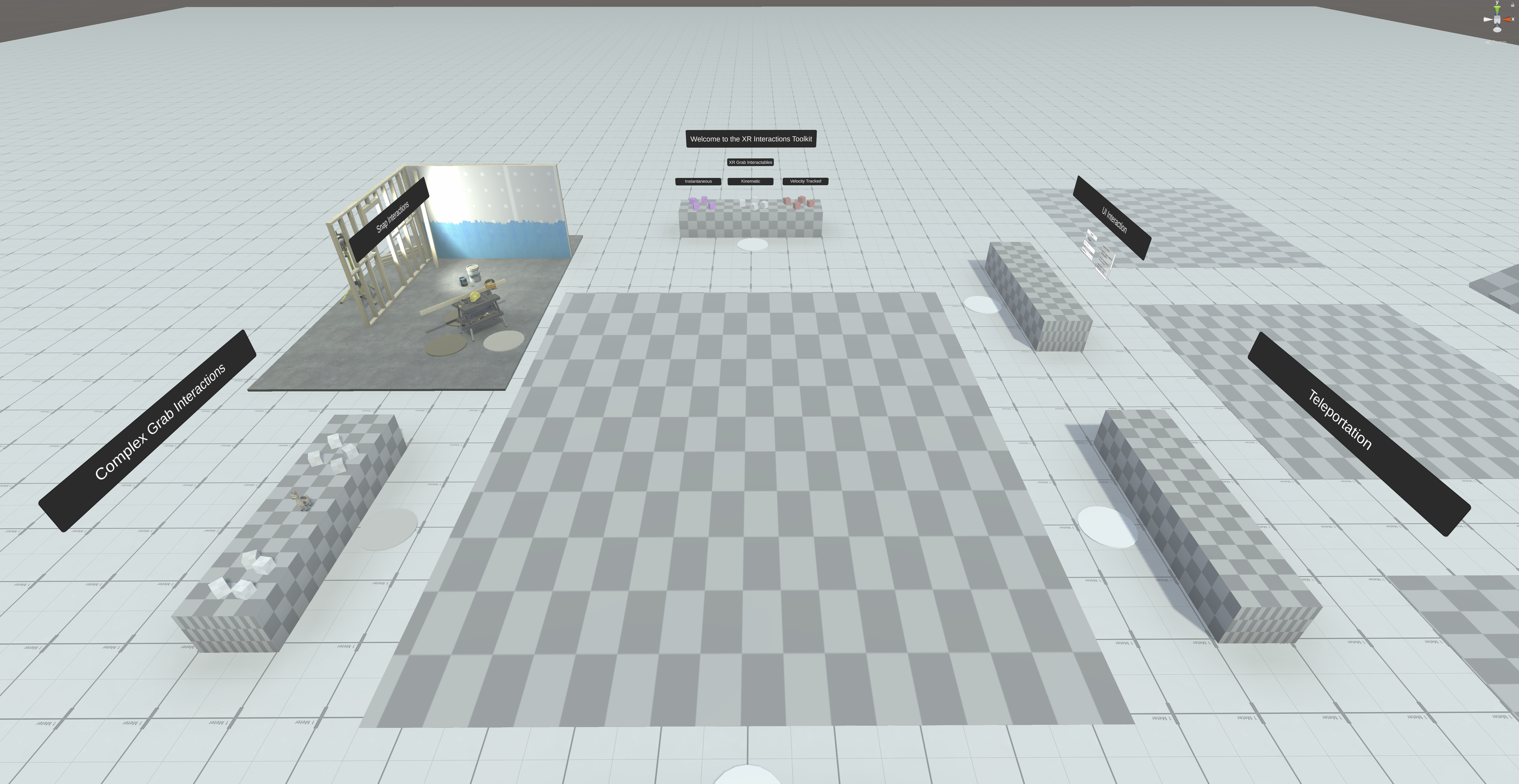

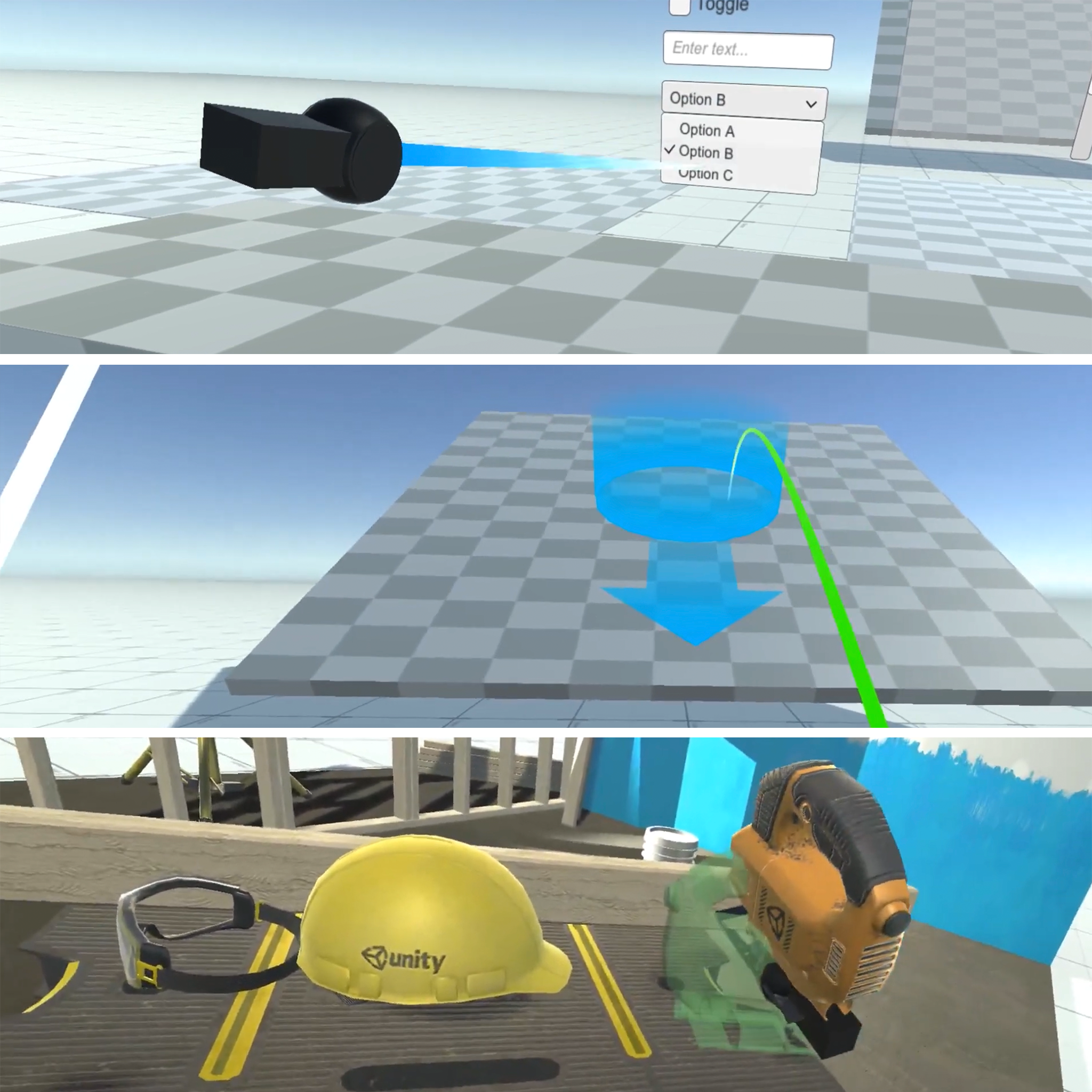

XR Interaction Toolkit sample

GOAL

Showcase a brand new interaction framework for VR

CHALLENGES

• Ambiguity around project outcome

• Short timeframe

• Framework still in active development

I built the original sample project for the XR Interaction Toolkit. The goal of the sample project was to showcase spatial interactions and basic features, and to show how to extend existing systems with custom logic and input.

Process

User Experience Goals

• Let users explore the space on their own

• Isolate features / capabilities to separate stations

• Show complexity with clear feedback and visuals

• Easy to use and understand at the project and scene level

Development

• Consider existing toolkits and visual elements

• Begin with simplest features

• Show complex features and extensibility

Start with the basics: physics grab

The XR Rig starts at the physics grab station. The station has three sets of blocks that show different configurations for grabbable rigidbody objects.

Simple interactions

2D UI

• Ray based interactions with clear visual feedback

• Showcases all the unique 2D UI elements

Locomotion

• Teleport anchors with orientation options

• Support for tilted surfaces

Snap Interactions

• Sockets with clear visual feedback

• Objects with grab positions and orientations

Complex interactions

Goals

• Showcase how to build custom grabbables

• Simple interactions with layered visuals

• Common interaction use-case demoed in a fun and engaging way

Turntable renderer

Built a Unity Editor tool for outputting videos and image sequences of assets rendered in real time. It leverages the Unity Recorder to configure and output a wide range of file formats and resolutions. The full documentation and repository are available on GitHub. The tool was used to capture turntables of the characters for Neon White, using a custom shader from the game, for Patrick Hillstead's ArtStation portfolio.